The Legal AI Reality Check: What Carnegie Mellon’s Summit Revealed About Hype vs. Progress

Last week, at the invitation of Manish Agnihorti, I joined 200 legal professionals, AI researchers, and legal operations leaders at Carnegie Mellon University for the Pittsburgh Legal AI Summit, sponsored by Coheso. Walking into the event, I expected the usual mix of vendor enthusiasm and cautious optimism that characterizes most legal tech gatherings. What I got instead was something far more valuable: a brutally honest assessment of where legal AI actually stands in late 2025.

The event brought together an unusual mix of voices, from Professor Graham Neubig, whose AI agents power some of tomorrow’s legal tools, to Kyle Bahr, who went from practicing law for 15 years to becoming an AI Innovation Manager at an elite law firm in just 18 months. The collision of academic rigor and practitioner experience produced insights that every legal operations leader evaluating AI vendors needs to hear.

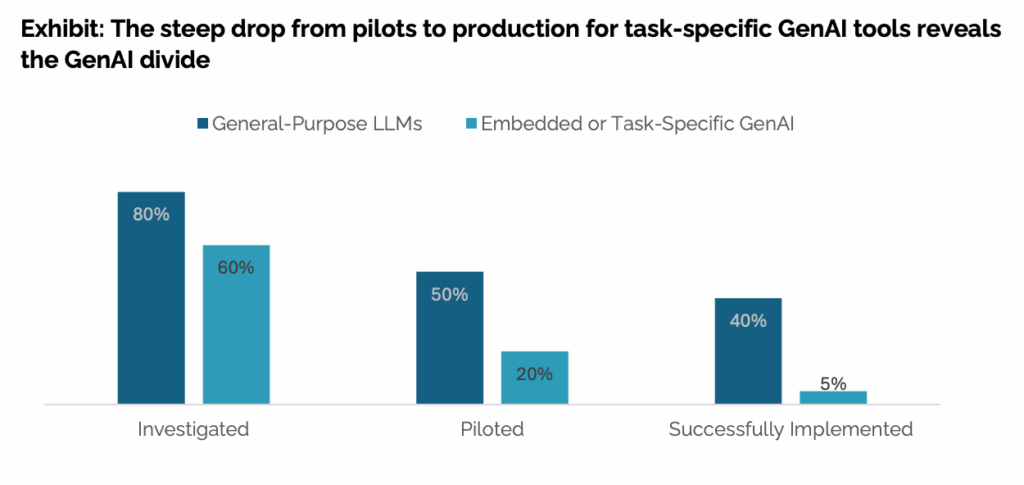

The 60-5 Problem: Why Legal AI Adoption Stalled

The most striking statistic came from MIT research presented during the summit: 60% of companies have investigated generative AI agents, 20% have piloted them, but only 5% have actually implemented them in production.

Let that sink in for a moment. In an industry where AI adoption among individual lawyers reportedly jumped from 2% to 90% in just two years, enterprise-wide deployment remains stuck in single digits.
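The funnel figures above reduce to simple stage-to-stage conversion rates. The short Python sketch below works through that arithmetic. It assumes the three percentages are nested (every company in production also piloted, and every pilot followed an investigation), which the summit figures imply but do not state outright. The names `funnel` and `stage_conversion` are illustrative only.

```python
# Adoption funnel as reported at the summit (percent of companies).
# Assumption: each stage is a subset of the one before it.
funnel = [("investigated", 60), ("piloted", 20), ("in production", 5)]


def stage_conversion(stages):
    """Return the share of each stage that reaches the next stage."""
    rates = {}
    for (prev_name, prev_pct), (next_name, next_pct) in zip(stages, stages[1:]):
        rates[f"{prev_name} -> {next_name}"] = next_pct / prev_pct
    return rates


rates = stage_conversion(funnel)
# End-to-end share of investigators that reach production.
overall = funnel[-1][1] / funnel[0][1]

for step, rate in rates.items():
    print(f"{step}: {rate:.0%}")
print(f"investigated -> in production: {overall:.0%}")
```

Under that assumption, about one in three investigations becomes a pilot, one in four pilots reaches production, and roughly one in twelve investigations ends in a production deployment.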

This isn’t a technology problem. The AI works, sometimes brilliantly. Carnegie Mellon’s Matthew D’Emilio demonstrated how his contracts office now processes 2,000 software reviews annually using AI tools, handling 60-80% of the university’s $500 million in sourceable spend. Hannah Boss from Grove Collaborative shared how an internal bot reduced invoice review from eight hours per week to one hour per month.
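To put the invoice review example on a common footing, the sketch below annualizes both figures. It assumes 52 working weeks and 12 months per year and ignores holidays or seasonal variation. The function name `annual_hours` is made up for illustration.

```python
WEEKS_PER_YEAR = 52   # assumption: review happens every week of the year
MONTHS_PER_YEAR = 12


def annual_hours(hours_per_period, periods_per_year):
    """Convert a recurring time cost into hours per year."""
    return hours_per_period * periods_per_year


before = annual_hours(8, WEEKS_PER_YEAR)   # eight hours per week
after = annual_hours(1, MONTHS_PER_YEAR)   # one hour per month
saved = before - after
reduction = saved / before

print(f"{before} h/yr -> {after} h/yr, saving {saved} h ({reduction:.0%})")
```

On those assumptions the workload drops from 416 hours a year to 12, a reduction of about 97%.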

The technology clearly delivers. So why aren’t more organizations deploying it?

The Accuracy Paradox: When Fluency Masks Reality

Professor Travis Breaux from CMU’s Computer Science department identified a problem that should concern every legal operations leader evaluating AI vendors: “Fluency is often mistaken for accuracy, and human trust is influenced by text quality.”

Current AI models are remarkably good at generating confident, well-written text. They’re far less reliable at getting the details right.

The benchmarks tell the story. The Agent Company benchmark, which tests AI systems on diverse, real-world tasks, shows that the best models currently solve 41% of tasks. Meanwhile, controlled software benchmarks report 75-80% accuracy, but researchers acknowledge these numbers “overestimate real-world impact.”

When I hear vendors claim their AI will automate contract review or replace legal expertise, I think back to Professor Neubig’s frank assessment: “System accuracy is estimated at 40% currently, and user education is at much lower levels.”

That gap between what AI sounds like it can do and what it actually can do is costing organizations millions in failed pilots and abandoned implementations.

The Integration Tax Nobody Talks About

Ashley Willoughby, who leads global legal operations at Coherent Corp, shared a story that resonated with every legal ops professional in the room. Coherent frequently acquires companies, and a recent acquisition “lacked AI and legal tech integration.” The M&A integration “disrupted existing CLM processes, requiring renegotiation and process redesign.”

This is the integration tax that vendor demos never show you.

The academic research backs this up. Professor Neubig’s work identifies three critical challenges facing AI adoption, in priority order:

1. System accuracy and reliability

2. Integration with existing systems

3. User education and interaction design

Notice that two of the three have nothing to do with AI capability and everything to do with organizational readiness.

The practitioners on the legal operations panel drove this point home. When evaluating vendors, they started with 12 options. After requiring custom demos that reflected their actual workflows, not generic use cases, they narrowed the field to four. Then procurement added six months to the timeline.

One panelist put it perfectly: “The question isn’t whether AI works in legal, it’s whether your organization is ready to integrate it.”

Why Synthetic Data Should Worry You

Here’s a technical detail that matters more than most vendors want to discuss: the legal AI you’re evaluating is increasingly trained on synthetic data, meaning AI-generated content used to train other AI systems.

Dr. Jaromir Savelka, a researcher at CMU and Vice President of the International Association for Artificial Intelligence and Law, explained the fundamental problem: “Lack of real domain-specific data limits AI model performance; synthetic data often leads to hallucinations.”

The challenge is unique to legal work. As Professor Kevin Ashley noted, there’s a “lack of data on attorney legal reasoning due to privilege and ethical constraints.” Unlike medicine, which has decades of structured, transparent data, law operates behind privilege protections that make it nearly impossible to build comprehensive training datasets.

Vendors are solving this problem by having AI systems generate their own training data. AI drafts contracts, other AI evaluates them, and the results train the next generation of models. It’s a closed loop that sounds efficient but risks amplifying errors rather than eliminating them.

When synthetic data works, it works well. But Professor Morgan Gray from the University of St. Thomas School of Law emphasized that “synthetic data generation showed limited variation and struggled to produce meaningful data from small seed corpora.”

If your vendor can’t explain where their training data comes from and how they validate accuracy, you’re flying blind.

The Amplification Principle: What Actually Works

Despite these challenges, legal AI is delivering remarkable results, just not through the automation story most vendors are selling.

Kyle Bahr’s journey is instructive. After 15 years practicing law in Washington, D.C. and Pittsburgh, he discovered AI in December 2022. By summer 2023, he had transitioned to building legal AI products. Today, as AI Innovation Manager at Cleary Gottlieb, he has watched AI adoption among lawyers climb sharply.

But here’s the crucial part: Bahr describes AI as “an amplification tool for litigators, not automation.” His team used AI to simulate a judge’s perspective, critique their arguments, and improve their litigation strategy in a federal court case. They secured summary judgment, with AI providing valuable feedback “including when ruling against user.”

This is the story that should guide your AI strategy: amplification, not automation.

Matthew D’Emilio from CMU echoed this principle: “AI augments but does not replace legal work; improves efficiency and quality.” His office handles 2,000 contract reviews annually not by eliminating lawyers but by giving them AI-powered tools that make them dramatically more effective.

The amplification principle has a corollary that vendors rarely discuss: “Generative AI amplifies both expertise and errors.” Give AI to an experienced lawyer with good judgment, and you’ll see remarkable productivity gains. Give the same tool to someone without domain expertise, and you’ll amplify their inexperience.

The De-skilling Risk: Why Implementation Design Matters

Professor Gray raised a concern that should influence how you deploy AI, not just whether you deploy it: “AI tools risk de-skilling professionals by automating core tasks.”

An MIT study found that students using LLMs to write essays showed lower brain activity and retention compared to those writing unaided. The implications for legal training are significant. If junior associates use AI to draft contracts without understanding the underlying legal reasoning, they may never develop the expertise that makes senior lawyers valuable.

The solution isn’t to avoid AI; the competitive pressure is too intense. Instead, law schools should “teach strategic, reflective AI use, not ban tools,” as Professor Gray recommended.

For legal operations leaders, this means thinking carefully about which tasks to automate and which to augment. Invoice review that drops from eight hours per week to one hour per month? Automate away. Contract drafting that teaches junior lawyers how to think about risk allocation? Augment, don’t replace.

What This Means for Your 2025-2026 Strategy

Walking out of CMU, I was struck by how different the reality of legal AI is from the story most vendors tell. The technology is genuinely transformative, but not in the ways most people expect.

Here’s what the research and practitioner experience suggest you should focus on:

Stop chasing automation, start building amplification strategies.

The organizations seeing real ROI aren’t the ones trying to replace lawyers with AI. They’re the ones giving experienced legal professionals tools that make them 4x more productive. Professor Neubig achieved exactly that productivity gain in his own work, not by automating his job away, but by augmenting his capabilities.

Demand realistic accuracy benchmarks, not demo perfection.

When a vendor shows you their system, ask about accuracy on real-world tasks, not controlled benchmarks. The roughly 40% task completion rate on diverse, realistic tasks is your baseline, not the 75-80% reported on controlled benchmarks. And remember that “fluency is often mistaken for accuracy”: well-written text doesn’t mean correct analysis.

Budget for the integration tax.

The technology cost is the easy part. The hard part is integrating with your existing systems, training your team, managing change, and dealing with M&A disruption. The legal ops leaders at the summit were unanimous: integration and change management are harder than technology selection. Expect 6+ months for procurement alone if you’re at an enterprise organization.

Evaluate vendors on their data story.

Ask where the training data comes from. If the answer involves synthetic data, dig deeper. How do they validate that AI-generated training data doesn’t propagate errors? What real-world legal reasoning data do they have access to? The quality of training data will determine the quality of your results more than any algorithmic advancement.

Prioritize change management over features.

The gap between 60% investigation and 5% deployment isn’t about missing features; it’s about organizational readiness. The vendors that succeed long-term will be the ones that help you build stakeholder trust, develop internal champions, and manage the human side of AI adoption. Hannah Boss emphasized that “AI adoption required business stakeholder buy-in and focus on trust and compliance.” That’s not a nice-to-have; it’s the difference between a successful pilot and a failed implementation.

The Vendor Selection Framework That Actually Works

The legal operations panel shared a vendor selection process that cut through the noise. They started with 12 vendors and narrowed to four through a simple but powerful requirement: custom demos reflecting their actual workflows, not generic use cases.

This matters because AI performance varies dramatically based on your specific use case. A system that excels at NDA review may struggle with complex commercial agreements. One that handles software procurement beautifully may fail at catering contracts (CMU’s second-highest volume category with 1,300+ buyers).

The custom demo requirement forces vendors to prove they understand your specific integration challenges, data security requirements, and workflow constraints. It surfaces the integration tax early, before you’ve invested months in a pilot that was never going to scale.

Once you’ve narrowed the field, focus on these priorities:

- Integration capabilities with your existing legal tech stack (APIs matter more than UI polish)

- Security and compliance features for FERPA, HIPAA, GDPR, and whatever regulations govern your industry

- Change management support that goes beyond user manuals

- Transparent accuracy metrics for your specific use cases

- Vendor stability in a rapidly evolving market
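One way to make these priorities comparable across shortlisted vendors is a simple weighted scorecard. The sketch below is an illustration only: the weights, the 1-5 rating scale, and the two fictional vendors are all assumptions, not anything the panel presented. Adjust them to reflect your own priorities.

```python
# Hypothetical weights for the five priorities above; they sum to 1.0.
WEIGHTS = {
    "integration": 0.30,
    "security_compliance": 0.25,
    "change_management": 0.20,
    "accuracy_transparency": 0.15,
    "vendor_stability": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into one weighted score; fail loudly on gaps."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


# Made-up ratings for two fictional vendors, gathered after custom demos.
vendors = {
    "Vendor A": {
        "integration": 4, "security_compliance": 5, "change_management": 2,
        "accuracy_transparency": 3, "vendor_stability": 4,
    },
    "Vendor B": {
        "integration": 3, "security_compliance": 4, "change_management": 4,
        "accuracy_transparency": 4, "vendor_stability": 3,
    },
}

# Highest weighted score first.
ranked = sorted(vendors, key=lambda v: weighted_score(vendors[v]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(vendors[name]):.2f}")
```

A scorecard like this does not replace judgment. Its main value is forcing the team to agree on weights before the demos, so that UI polish does not quietly outrank integration.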

And here’s the contrarian advice: sometimes building beats buying. The organizations that saw the fastest ROI, like the one whose internal bot cut invoice review from eight hours per week to one hour per month, often built custom solutions for specific, high-volume workflows. You don’t need a comprehensive CLM platform to automate invoice review. Sometimes a focused tool beats a Swiss Army knife.

The Truth About Legal AI Software in 2025

Legal AI software has moved past the hype cycle into something more interesting: selective, strategic deployment focused on amplification rather than automation.

The winners in this next phase won’t be the organizations that buy the most AI or deploy it the fastest. They’ll be the ones that:

- Set realistic expectations based on 40% task completion, not 90% demo perfection

- Focus on amplifying expert judgment rather than automating it away

- Invest as much in change management as in technology

- Understand the integration tax and budget accordingly

- Demand transparency about training data and accuracy metrics

Professor Neubig offered advice that applies to both vendors and buyers: “Combine quick workflow wins with long-term agent integration strategy.”

Find the eight-hour-per-week workflows you can reduce to one hour per month. Prove the value, build organizational trust, and develop the integration expertise you’ll need for more complex deployments. Then, and only then, tackle the comprehensive contract review systems and autonomous agents that vendors are eager to sell you.

The legal AI revolution is real. It’s just not the revolution most vendors are selling.

The question for your organization isn’t whether to adopt legal AI; the competitive pressure makes that decision for you. The question is whether you’ll learn from the organizations that are five years ahead, or whether you’ll repeat their expensive mistakes.

The practitioners and researchers at Carnegie Mellon have given us a roadmap. The organizations that follow it will dominate the next decade of legal operations. The ones that don’t will wonder why their AI pilots keep failing despite all the impressive demos.